Dev Documentation¶

This part briefly describes some key functions.

Experiment module¶

- This module mainly contains two classes:

Experiment is the entry point to start and end the recording of the power consumption of your experiment.

ExpResult is used to process and format the recordings.

Both classes use a driver attribute to communicate with a database, or to read and write JSON files.

The module can be used as a script to print the details of an experiment:

python -m deep_learning_power_measure.power_measure.experiment --output_folder AIPowerMeter/measure_power/

- deep_learning_power_measure.power_measure.experiment.Experiment(driver, model=None, input_size=None, cont=False)¶

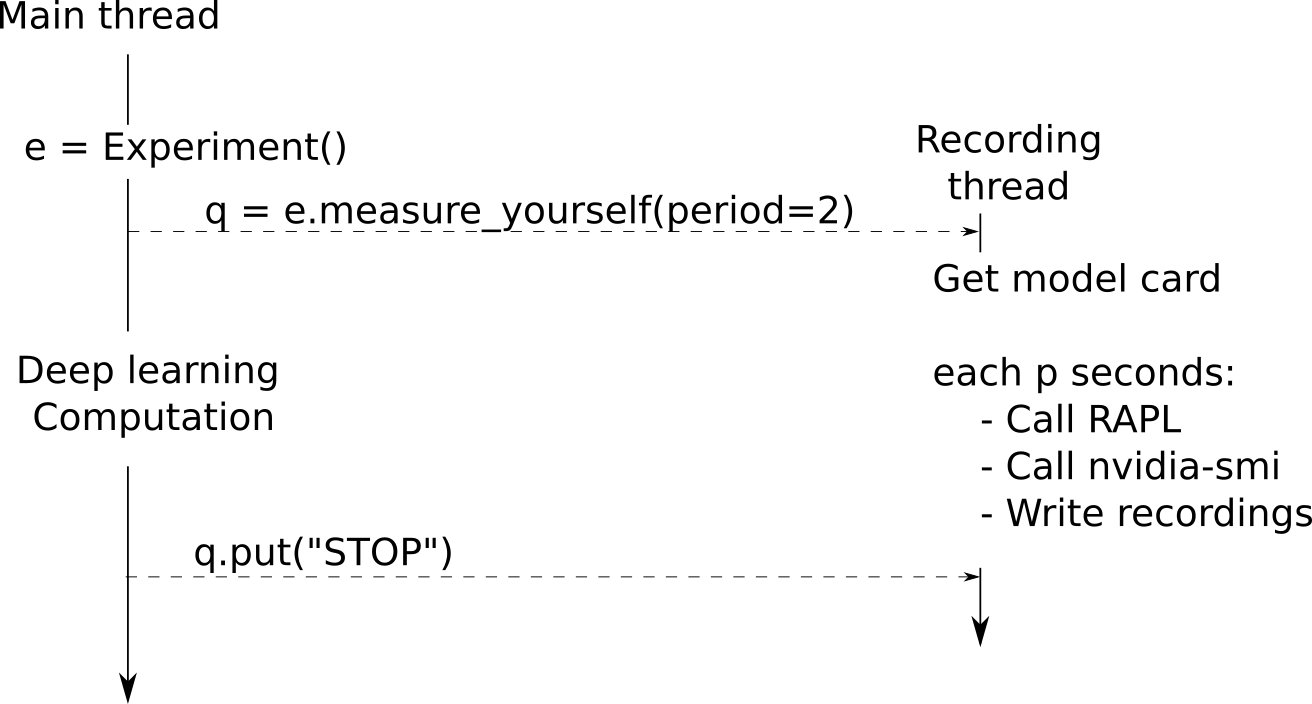

This class provides the method to start an experiment by launching a thread that records the power draw. The recording is ended by sending a stop message to this thread.

In practice, a thread is launched to record the energy of your program.
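The start/stop mechanism described above can be sketched with the standard library alone. This is an illustration of the pattern, not the package's implementation: `STOP_MESSAGE`, `record` and `run_with_recording` are hypothetical names, and the recorder stores timestamps where a real recorder would read a power counter.

```python
import queue
import threading
import time

STOP_MESSAGE = "stop"  # hypothetical sentinel, not necessarily the library's value


def record(messages, samples, period=0.01):
    """Append a timestamp every `period` seconds until the stop message arrives."""
    while True:
        samples.append(time.time())  # stand-in for reading a power counter
        try:
            # Wait up to `period` seconds for a message from the main thread.
            if messages.get(timeout=period) == STOP_MESSAGE:
                break
        except queue.Empty:
            continue  # no message yet: take the next sample


def run_with_recording(workload):
    """Run `workload` in the main thread while a recorder thread samples."""
    messages = queue.Queue()
    samples = []
    recorder = threading.Thread(target=record, args=(messages, samples))
    recorder.start()
    try:
        result = workload()
    finally:
        messages.put(STOP_MESSAGE)  # ask the recorder to stop
        recorder.join()
    return result, samples


result, samples = run_with_recording(lambda: sum(range(100_000)))
```

Sending the stop message in a `finally` clause means the recorder thread is stopped even if the workload raises an exception.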

Interaction between the experiment and the recording threads¶

If you also want to record the time, accuracy and other valuable metrics, the simplest way is to do it in the main thread and then interpolate with the timestamps of the energy recordings if an alignment is needed.
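One possible way to do this alignment is linear interpolation of the power samples at the timestamps where the metrics were logged. The sketch below uses only the standard library and made-up data; `interpolate`, `power_times`, `power_watts` and `accuracy_log` are hypothetical names, not part of the package.

```python
from bisect import bisect_right


def interpolate(timestamps, values, t):
    """Linearly interpolate `values` (sampled at sorted `timestamps`) at time `t`."""
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    i = bisect_right(timestamps, t)  # first index with timestamps[i] > t
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Made-up data: power sampled every 2 s, accuracy logged at other times.
power_times = [0.0, 2.0, 4.0, 6.0]
power_watts = [50.0, 70.0, 90.0, 60.0]
accuracy_log = [(1.0, 0.52), (3.0, 0.71), (5.5, 0.80)]

# Each entry pairs a logged accuracy with the power estimated at that instant.
aligned = [(t, acc, interpolate(power_times, power_watts, t)) for t, acc in accuracy_log]
```

Times outside the recorded range are clamped to the first or last power sample rather than extrapolated.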

rapl_power module¶

Handling of the CPU use and CPU consumption with RAPL

- deep_learning_power_measure.power_measure.rapl_power.get_mem_uses(process_list)¶

Get memory usage. psutil is used to collect the pss and uss values; rss is collected if pss is not available. Some info from the psutil documentation:

USS : (Linux, macOS, Windows): aka “Unique Set Size”, this is the memory which is unique to a process and which would be freed if the process was terminated right now

PSS : (Linux): aka “Proportional Set Size”, is the amount of memory shared with other processes, accounted in a way that the amount is divided evenly between the processes that share it. I.e. if a process has 10 MBs all to itself and 10 MBs shared with another process its PSS will be 15 MBs.

RSS : Resident Set Size, by contrast, is the non-swapped physical memory that a task has used, in bytes. With the previous example, the result would be 20 MB instead of 15 MB.

- Args :

process_list : list of psutil.Process objects

- Returns:

mem_info_per_process : memory consumption for each process
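The difference between the three measures can be checked with the example figures quoted above. This is a simplified model that assumes all shared memory is resident and split evenly between the sharing processes; `memory_measures` is a hypothetical helper, not a function of the package or of psutil.

```python
def memory_measures(private_mb, shared_mb, n_sharers):
    """Return (USS, PSS, RSS) in MB for one process.

    private_mb : memory used only by this process
    shared_mb  : memory shared with other processes
    n_sharers  : number of processes sharing `shared_mb`, this one included
    """
    uss = private_mb                          # unique to the process
    pss = private_mb + shared_mb / n_sharers  # shared part split evenly
    rss = private_mb + shared_mb              # shared part counted in full
    return uss, pss, rss


# 10 MB private and 10 MB shared with one other process.
uss, pss, rss = memory_measures(private_mb=10, shared_mb=10, n_sharers=2)
```

With these inputs the PSS is 15 MB and the RSS is 20 MB. Summing RSS over processes therefore counts shared memory several times, whereas summing PSS does not.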

- deep_learning_power_measure.power_measure.rapl_power.get_cpu_uses(process_list, period=2.0)¶

Extracts the relative share of CPU time attributed to each process.

- For each process p in process_list, compute over the period:

relative cpu usage : ( cpu time of p ) / (cpu time of the whole system)

absolute cpu usage : cpu time of p

- Args:

process_list : list of process [pid1, pid2,…] for which the cpu use will be measured

pause : sleeping time during which the cpu use will be recorded (this appears to correspond to the period argument of the signature)

- Returns:

cpu_uses = {pid1 : cpu_use, } where cpu_use is the share of CPU time used by this process with respect to the total CPU time used by the system over this period
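The relative usage defined above comes down to a ratio of two differences of cumulative CPU times. The sketch below works on made-up samples and assumes the CPU times have already been collected; `relative_cpu_uses` and its arguments are hypothetical names, not the package's API.

```python
def relative_cpu_uses(before, after, system_before, system_after):
    """Share of system CPU time used by each pid between two samples.

    before, after : {pid: cumulative cpu seconds of the process}
    system_before, system_after : cumulative cpu seconds of the whole system
    """
    system_delta = system_after - system_before
    uses = {}
    for pid, start in before.items():
        if pid not in after:
            continue  # the process ended during the period
        process_delta = after[pid] - start
        uses[pid] = process_delta / system_delta if system_delta > 0 else 0.0
    return uses


# Made-up samples taken 2 seconds apart on a hypothetical machine.
before = {101: 12.0, 202: 3.0}
after = {101: 13.5, 202: 3.5}
cpu_uses = relative_cpu_uses(before, after, system_before=1000.0, system_after=1004.0)
```

Here process 101 used 1.5 of the 4 CPU seconds consumed by the system, so its relative usage is 0.375.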

- deep_learning_power_measure.power_measure.rapl_power.get_power(diff)¶

Return the energy accumulated between the provided pair of RAPL samples for the different RAPL domains.

- Args:

diff : difference between two RAPL samples

- Returns:

Dictionary where each key corresponds to a RAPL domain (core, uncore, ram) and the value is the accumulated energy consumption in Joules. See https://greenai-uppa.github.io/AIPowerMeter/background/background.html#cpu-and-rapl
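As an illustration of how an energy difference between two samples relates to power, the sketch below converts raw counter values to Joules and then to average Watts. It assumes counters expressed in microjoules, as exposed by the Linux powercap interface, and an optional maximum range to correct a counter wraparound; the function names and the data are hypothetical and do not reproduce `get_power` itself.

```python
def energy_joules(start_uj, end_uj, max_range_uj=None):
    """Energy per domain between two counter samples, in Joules.

    start_uj, end_uj : {domain: counter value in microjoules}
    max_range_uj     : optional {domain: counter range}, used if a counter wrapped
    """
    energy = {}
    for domain, start in start_uj.items():
        delta = end_uj[domain] - start
        if delta < 0 and max_range_uj is not None:
            delta += max_range_uj[domain]  # assumed wraparound correction
        energy[domain] = delta / 1e6  # microjoules -> Joules
    return energy


def average_power_watts(energy, duration_s):
    """Average power per domain: energy divided by the sampling duration."""
    return {domain: joules / duration_s for domain, joules in energy.items()}


# Made-up counter values sampled 2 seconds apart.
start = {"core": 1_000_000, "uncore": 500_000, "ram": 200_000}
end = {"core": 41_000_000, "uncore": 2_500_000, "ram": 6_200_000}

energy = energy_joules(start, end)
power = average_power_watts(energy, duration_s=2.0)
```

In this example the core domain accumulated 40 J over 2 s, an average of 20 W.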

gpu_power module¶

This module parses the xml provided by nvidia-smi to obtain the consumption, memory and SM used for each gpu and each pid.

- deep_learning_power_measure.power_measure.gpu_power.get_nvidia_gpu_power(pid_list=None, nsample=1)¶

Get the power and use of each GPU. First, get the GPU usage per process. Second, get the nvidia power use for each GPU. Then, for each GPU and each process in pid_list, compute its attributable power.

- Args:

pid_list : list of processes to be measured

nsample : number of queries to nvidia

- Returns:

- a dictionary with the following keys:

nvidia_draw_absolute : total nvidia power draw for all the gpus

per_gpu_power_draw : nvidia power draw per gpu

per_gpu_attributable_mem_use : memory usage for each gpu

per_gpu_per_pid_utilization_absolute : absolute % of Streaming Multiprocessor (SM) used per gpu per pid

per_gpu_absolute_percent_usage : absolute % of SM used per gpu for the given pid list

per_gpu_estimated_attributable_utilization : relative use of SM used per gpu by the experiment
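One plausible way to turn these quantities into an attributable power is to scale the power draw of a GPU by the experiment's share of SM use. The sketch below assumes this proportional rule, which may differ from what the package actually does; `attributable_gpu_power` and the sample values are hypothetical.

```python
def attributable_gpu_power(power_draw_w, sm_use_per_pid, pid_list):
    """Estimate the share of one GPU's power draw owed to `pid_list`.

    power_draw_w   : power draw of the GPU in Watts
    sm_use_per_pid : {pid: % of SM used by that pid on this GPU}
    pid_list       : pids belonging to the experiment
    Returns (relative SM use of the experiment, attributable power in Watts).
    """
    total_use = sum(sm_use_per_pid.values())
    if total_use == 0:
        return 0.0, 0.0  # idle GPU: nothing to attribute
    experiment_use = sum(use for pid, use in sm_use_per_pid.items() if pid in pid_list)
    relative_use = experiment_use / total_use
    return relative_use, power_draw_w * relative_use


# Made-up values: a GPU drawing 200 W shared by three processes.
sm_use = {101: 30, 202: 10, 303: 40}
relative_use, power_w = attributable_gpu_power(200.0, sm_use, pid_list=[101, 202])
```

Processes 101 and 202 account for 40 of the 80 SM percentage points in use, so half of the 200 W is attributed to the experiment.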